On January 29, VCCorp, the National Cybersecurity Association, and TikTok Vietnam co-hosted a forum titled “Safe Tet (Lunar New Year), No Deepfake Fears”, focusing on the growing threat of deepfake technology and its role in modern scams.

The session dissected the mechanics of deepfakes, the latest fraud tactics, and emerging cybercrime trends. Speakers analyzed real-world cases, ranging from cloned voices and faces used to impersonate family members to fake calls and messages posing as businesses or government officials.

Experts highlighted how scams have become particularly manipulative during festive seasons like Tet, when emotions run high and people are more likely to fall for urgent messages about fake promotions, gift deliveries, or prize winnings.

Speaking at the event, Vu Duy Hien, Deputy Secretary General and Chief of Office of the National Cybersecurity Association, noted that while online fraud remains a serious issue, recent data shows promising signs of improvement.

The association’s 2025 survey found that online scam victims accounted for just 0.18% of individual users - down from 0.45% in 2024. That’s roughly one victim per 555 users. The decline reflects increased public awareness and collective efforts by authorities, tech companies, the media, and civil society groups.
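As a quick check on those figures, the short sketch below converts the reported percentages into "one victim per N users" and computes the relative year-on-year decline. The function names are illustrative, and the comparison assumes both surveys measured the same kind of user base, which the article does not state.

```python
# Victim rates as reported from the association's surveys (percent of individual users).
rate_2024 = 0.45
rate_2025 = 0.18


def users_per_victim(rate_percent: float) -> float:
    """Convert a victim rate in percent into 'one victim per N users'."""
    return 100.0 / rate_percent


def relative_decline(old_percent: float, new_percent: float) -> float:
    """Relative drop from the old rate to the new rate, in percent."""
    return (old_percent - new_percent) / old_percent * 100.0


print(f"2024: about one victim per {users_per_victim(rate_2024):.1f} users")
print(f"2025: about one victim per {users_per_victim(rate_2025):.1f} users")
print(f"Relative decline: {relative_decline(rate_2024, rate_2025):.0f}%")
```

On these numbers, 0.18% works out to about one victim per 555.6 users, consistent with the rounded figure above, and the fall from 0.45% to 0.18% is a relative decline of about 60%.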

Deepfake fraud: More deceptive, more dangerous

Despite the encouraging trend, experts warn that scams are evolving - not disappearing. Among the most alarming developments is the misuse of deepfake technology to forge faces, voices, and entire identities, making it increasingly difficult for users to distinguish between real and fake.

The association issued several urgent warnings, particularly with Tet around the corner:

First, users should no longer treat images, voices, or video as reliable proof of identity. Any request involving money transfers, account verification, or personal data - no matter how familiar or official it seems - should be double-checked.

Second, scammers often use time pressure. Deepfake scams are designed to create a sense of urgency that prevents rational decision-making. Pausing to verify through official channels or directly contacting the supposed sender is critical.

Third, protecting your personal data is vital. Deepfakes are only convincing when trained on real content. Oversharing voice clips, selfies, or personal details on unknown platforms can unwittingly fuel future scams.

Fourth, financial transactions should only happen through official platforms. Do not follow ad hoc instructions from the other party or click on unfamiliar links. If anything seems suspicious, stop immediately and contact your bank or the relevant platform.

As artificial intelligence develops at lightning speed, cybersecurity is no longer just about strong passwords - it depends on user habits, critical thinking, and the ability to spot red flags. Experts stressed the need for awareness training and practical tools to counter the rising tide of AI-enabled deception.

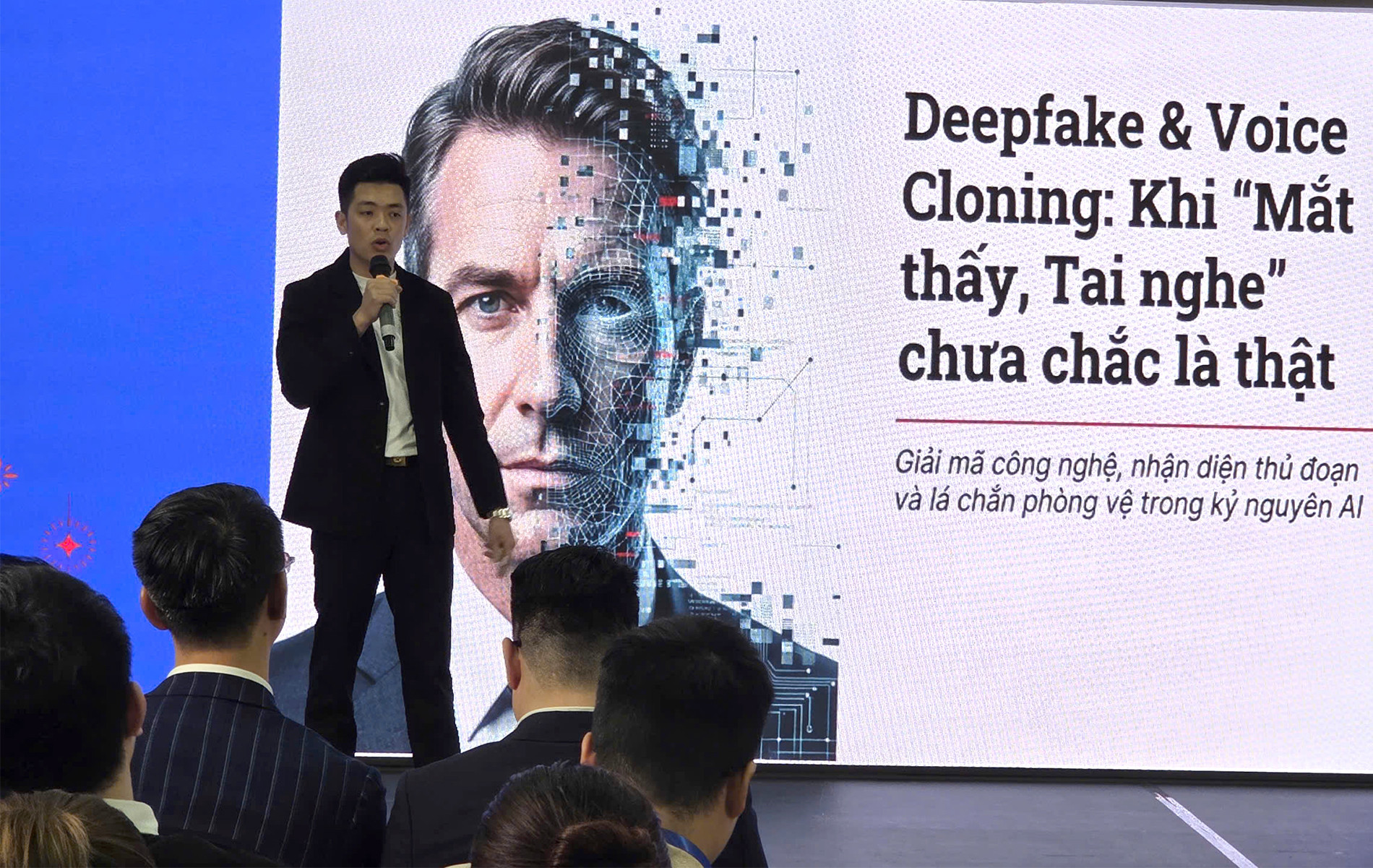

“When what you see and hear isn’t real”

“Deepfake & voice cloning: Seeing and hearing are no longer believing,” said Vu Duy Hung, founder of Hung AI Creative. According to him, deepfakes are becoming one of the most severe threats in the AI era, blurring the boundary between authenticity and manipulation.

Modern AI tools can now fabricate highly convincing fake content, including images, voices, and videos, that is almost indistinguishable from the real thing. By the first quarter of 2025, global losses from deepfake scams had reached $200 million, with attacks occurring every five minutes.

“We can’t stop technology from advancing,” Hung said, “but we can upgrade our inner filters.” He suggested three defensive habits: slow down - don’t act under fear; verify - always confirm through secondary channels; and protect - keep your biometric and personal data secure.

The psychological traps behind deepfake scams

The forum also explored the psychological and social reasons why people fall for deepfakes, particularly during emotional or high-stress moments like year-end holidays.

Assoc. Prof. Tran Thanh Nam, Vice President of the University of Education at Vietnam National University, explained: “Living in an age of information overload creates cognitive blind spots. These gaps in perception make young people especially easy targets.”

According to him, several psychological patterns contribute to this vulnerability:

A rush for speed, driven by FOMO (fear of missing out), weakens emotional control and risk judgment.

Herd mentality causes people to believe false evidence just because others do.

A lack of critical thinking and digital financial literacy, combined with a craving for recognition, makes users susceptible to deceptive flattery and social engineering.

As Tet approaches, experts stress that no matter how advanced the technology gets, the best defense remains human awareness.

Hai Phong