“Right after receiving a message on a social network from my long-time neighbor P. asking to borrow money for his business, I sent him the sum he requested without a second thought,” D. recalled sadly. That same afternoon, D. discovered that criminals had already taken over P.’s Facebook account and were sending similar messages to everyone on his friend list in an attempt to scam them. Only when D. called P.’s family and other friends to check did he realize he had fallen for the cybercriminals’ trick and lost his money.

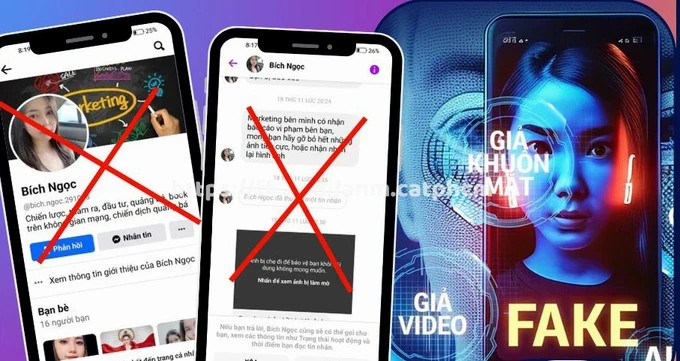

Lately, police in various localities have repeatedly warned the public about schemes that use Deepfake technology to swindle people out of money online. Deepfake is an AI application that creates highly convincing fake content from a person’s voice, face, and simple movements.

People with malicious intent are using Deepfake technology to make calls on social network platforms such as Zalo or Facebook, impersonating victims’ relatives or friends and asking to borrow money for emergencies.

To do this, the criminals usually collect personal information, images, and videos that social network users have posted publicly. They then use Deepfake technology to create new content, often with unclear audio or even flickering images, which makes it harder for victims to check its authenticity. When a victim tries to verify the information through a call, the criminals either refuse to answer or use software to manipulate voices and images to deceive the victim further.

The toughest challenge at present is identifying sophisticated scams that exploit both Deepfake and ChatGPT.

These two technologies are also being misused to fake images and video clips of celebrities. For instance, in December 2023, singer P.M.C. had to publicly deny appearing in a sensitive clip made with Deepfake, and she reported the case to the competent authorities.

More seriously, a number of accounts and channels hostile to the Party and the State of Vietnam have posted Deepfake images, audio, and clips to spread propaganda against Vietnamese law or even to fabricate stories about Vietnam’s current socio-economic and political situation, content that the public finds hard to tell apart from the real thing.

Cyber security experts said there is currently no mechanism or technology that can completely eliminate Deepfake scams. The public should therefore keep their knowledge of this issue up to date. When receiving a voice message from an acquaintance, especially one asking to borrow money, people should confirm that it is genuine through a separate verification method the two sides have agreed on in advance.

Signs that a clip may have been made with Deepfake include jerky body movements, lighting or skin tone that keeps changing between video frames, mouth movements that do not match the voice, and eyes that never blink.

In related news, banks nationwide have recently received reports of credit card fraud via QR codes. Scanning these QR codes takes card owners to a fake bank website, and once they enter their authentication details there, they unknowingly lose control of their bank accounts. These malicious QR codes can be attached to emails or messages without being caught by filters.

The Authority of Information Security has urged bank customers to be more cautious when scanning QR codes posted in public places or sent via social network messages and emails. They should check whether the linked website begins with the prefix ‘https’ and has a familiar domain name. Confidential information such as bank or social network account names should not be shared publicly.
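The link check recommended above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not an official tool: the function name `looks_trustworthy` and the allowlisted domain `examplebank.com.vn` are hypothetical. A link passes only if it uses https and its host is exactly a trusted domain or a subdomain of one. The domain check matters because https on its own only means the connection is encrypted; a fake site can use https too.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: replace with the domains your own bank actually uses.
TRUSTED_DOMAINS = {"examplebank.com.vn"}


def looks_trustworthy(url: str, trusted=TRUSTED_DOMAINS) -> bool:
    """Return True only if the URL uses https and its host is a trusted
    domain or a subdomain of one (hypothetical helper for illustration)."""
    parts = urlsplit(url.strip())
    if parts.scheme.lower() != "https":
        return False
    # .hostname is lowercased and excludes any "user@" prefix, so a link like
    # "https://examplebank.com.vn@evil.example" resolves to "evil.example".
    host = (parts.hostname or "").rstrip(".")
    # Exact match, or a subdomain ending in ".<trusted domain>".
    return any(host == d or host.endswith("." + d) for d in trusted)


if __name__ == "__main__":
    for link in [
        "https://examplebank.com.vn/login",
        "http://examplebank.com.vn/login",
        "https://examplebank.com.vn.evil.example/login",
        "https://examplebank.com.vn@evil.example/login",
        "https://online.examplebank.com.vn/",
    ]:
        print(link, "->", looks_trustworthy(link))
```

Matching on the parsed hostname rather than on the raw text is what rejects look-alike links such as `examplebank.com.vn.evil.example` or `examplebank.com.vn@evil.example`, which a simple "does the link contain the bank’s name" check would wrongly accept.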

SGGP